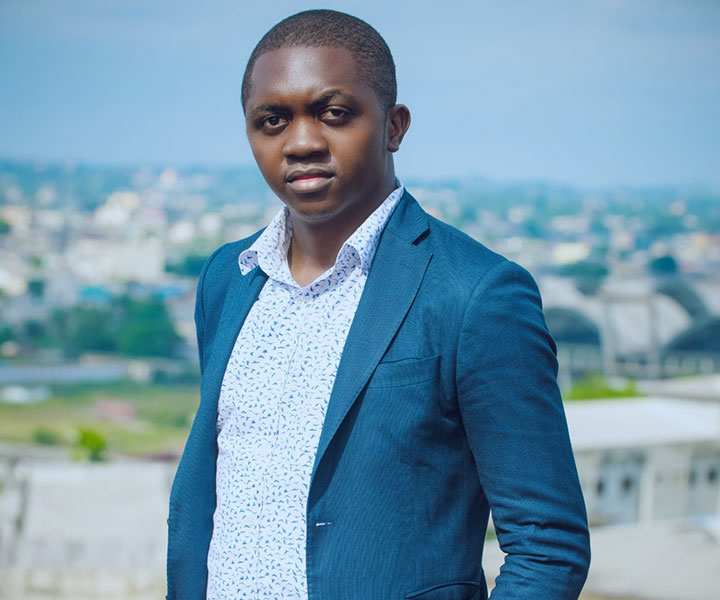

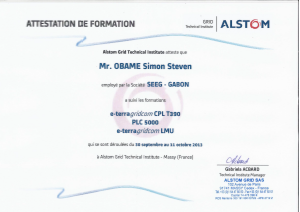

Simon S. OBAME

Data Analysis Project Manager

Hi! My name is Simon. I'm a Data Scientist and Project Manager at SEEG, with over 12 years of experience in the energy and water sector.

DOB: October 28, 1985

Email: contact @ stevenobame.com

Website: www.stevenobame.com